
Nadine Wolff

published on: 11.01.2019

Crawling – The spider on the move on your website


This article explains what "crawling" actually is and how it differs from indexing by Google. You will also get to know a small selection of web crawlers and get a brief insight into what each of them focuses on.

In addition, you will learn how the Google crawler works and how you can control it, since crawling can be steered with a few simple tricks.

The term "crawling" is a fundamental technical term in search engine optimization.

It is often confused or mixed up with "indexing".

Both are so fundamental that the entire web depends on the processes they describe.

What crawlers are there?

A web crawler (also known as an ant, bot, web spider, or web robot) is a program or script that automatically browses web pages and collects very specific information from them. This process is called web crawling or spidering.
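To make the idea of a crawler more concrete, here is a minimal sketch of a breadth-first crawler in Python. It is not how Googlebot or any of the tools below actually work; it only illustrates the basic loop: fetch a page, extract its links, and queue the ones not seen yet. The names `crawl` and `LinkExtractor` are hypothetical, and the `fetch` function is an assumption supplied by the caller (here an in-memory dictionary stands in for real HTTP requests), so the example runs without network access.

```python
from collections import deque
from html.parser import HTMLParser
from typing import Callable, Dict, List, Optional
from urllib.parse import urldefrag, urljoin, urlparse


class LinkExtractor(HTMLParser):
    """Collects the href values of all <a> tags on a page."""

    def __init__(self) -> None:
        super().__init__()
        self.links: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(
    start_url: str,
    fetch: Callable[[str], Optional[str]],
    max_pages: int = 100,
) -> Dict[str, List[str]]:
    """Breadth-first crawl that stays on the host of start_url.

    fetch(url) returns the page's HTML, or None if the page is unavailable.
    Returns a mapping of each visited URL to the internal links found on it.
    """
    host = urlparse(start_url).netloc
    queue = deque([start_url])
    seen = {start_url}
    visited: Dict[str, List[str]] = {}
    while queue and len(visited) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:  # e.g. a 404 or a network error
            continue
        extractor = LinkExtractor()
        extractor.feed(html)
        internal: List[str] = []
        for href in extractor.links:
            # Resolve relative links and drop #fragments.
            absolute, _ = urldefrag(urljoin(url, href))
            if urlparse(absolute).netloc != host:
                continue  # external link: not followed
            internal.append(absolute)
            if absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
        visited[url] = internal
    return visited


# In-memory stand-in for a real website (hypothetical URLs).
SITE = {
    "https://www.example.com/": '<a href="/blog/">Blog</a> <a href="https://other.example.org/">Partner</a>',
    "https://www.example.com/blog/": '<a href="post-1.html">Post</a> <a href="/">Home</a>',
    "https://www.example.com/blog/post-1.html": "<p>No links here.</p>",
}

for page, links in crawl("https://www.example.com/", SITE.get).items():
    print(page, "->", links)
```

The `max_pages` limit is a simple stand-in for the idea that a crawler only spends a limited amount of effort on each site.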

Web crawlers have various uses, but essentially they collect and retrieve data from the Internet. Most search engines use them to keep their data up to date and to find the latest information on the Internet (e.g., for indexing in Google's search results pages). Analytics companies and market researchers use web crawlers to identify customer and market trends. Below are some well-known web crawlers used specifically in SEO:

Ahrefs - a well-known SEO tool that provides very concrete data on backlinks and keywords.

SEMrush - an all-in-one marketing suite for SEO, social media, traffic, and content research.

Screaming Frog - an SEO spider tool available as downloadable software for Mac OS, Windows, and Ubuntu, in both a free and a paid version.

Crawling vs. Indexing

Crawling and indexing are two different things, which is often misunderstood in SEO. Crawling means that a bot (e.g., the Googlebot) visits the page and analyzes its content, which can include text, images, or CSS files. Indexing means that the page is stored in Google's index and can be displayed in the search results. The two are closely linked, but they do not always go together.

Imagine you are walking down a long hotel hallway with closed doors on either side. Accompanying you is a kind of travel guide, in this case the Googlebot.

If Google is allowed to search a page (a room), it can open the door and actually see what's inside (crawling).

A sign may indicate that the Googlebot is allowed to enter the room and show it to other people (you). In that case, indexing is possible and the page is shown in the search results.

The sign could also say that the Googlebot is not allowed to show the room to anyone ("noindex"). The page is then crawled, because the bot could look inside, but it is not displayed in the search results, because the bot has been instructed not to show the room to people.

If a page is blocked for the crawler (e.g., a sign on the door stating "Google is not allowed in here"), the Googlebot will not go in and look around. It never peeks into the room, but it may still show people the door (indexing) and tell them that they can go in if they want.

Even if there is an instruction inside the room saying not to show the room to people (a "noindex" meta tag), the Googlebot will never see it, since it was not allowed to enter.

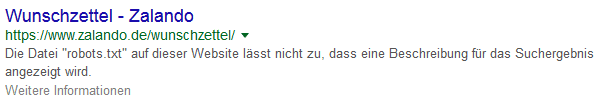

Blocking a page through robots.txt therefore does not prevent it from being indexed. Whether the page itself contains a meta robots "index" or "noindex" tag makes no difference: Google cannot see the tag while the page is blocked, so the page is treated as indexable by default. Of course, the ranking potential of such a page is reduced, because its content cannot actually be analyzed. If you have ever seen a search result whose description reads something like "The description of this page is unavailable because of robots.txt," this is the reason.

Google search results page with a blocked description due to robots.txt
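The interplay between robots.txt and the "noindex" tag described above can be summarized as a small decision function. This is a deliberately simplified model written for illustration, with the hypothetical names `outcome` and `Outcome`; Google's actual behavior has more nuances (for example, a blocked URL usually ends up in the index only when other pages link to it).

```python
from dataclasses import dataclass


@dataclass
class Outcome:
    crawled: bool         # did the bot look inside the "room"?
    may_be_indexed: bool  # can the URL appear in search results at all?
    has_snippet: bool     # can a description based on the content be shown?


def outcome(blocked_by_robots_txt: bool, meta_noindex: bool) -> Outcome:
    """Simplified model of the robots.txt / noindex interplay (hypothetical)."""
    if blocked_by_robots_txt:
        # The bot never enters, so it never sees a noindex tag either:
        # meta_noindex is deliberately ignored. The bare URL can still be
        # indexed, but without a description.
        return Outcome(crawled=False, may_be_indexed=True, has_snippet=False)
    if meta_noindex:
        # Crawled, but the page asks not to be shown in the results.
        return Outcome(crawled=True, may_be_indexed=False, has_snippet=False)
    # Crawled and allowed to be shown, including a description.
    return Outcome(crawled=True, may_be_indexed=True, has_snippet=True)


for blocked in (False, True):
    for noindex in (False, True):
        print(f"robots.txt blocked={blocked}, noindex={noindex}: {outcome(blocked, noindex)}")
```

The last two combinations produce the same result, which is exactly the point of the hotel analogy: a "noindex" instruction behind a locked door has no effect.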

Google Crawler – he came, he saw, and he indexed

The Googlebot is Google's search bot that crawls the web and builds an index; it is also known as a spider. The bot crawls every page it has access to and adds the pages that may be indexed to the index, from which they can be retrieved and returned in response to users' search queries.
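What "adding a page to the index" means can be illustrated with a toy inverted index, the classic data structure behind full-text search: it maps each word to the pages that contain it, so a query can be answered without re-reading every page. This is a textbook sketch with hypothetical function names and URLs, not a description of how Google's index is actually built.

```python
import re
from collections import defaultdict
from typing import Dict, List, Set


def tokenize(text: str) -> List[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(pages: Dict[str, str]) -> Dict[str, Set[str]]:
    """Map each word to the set of URLs whose text contains it."""
    index: Dict[str, Set[str]] = defaultdict(set)
    for url, text in pages.items():
        for word in tokenize(text):
            index[word].add(url)
    return index


def search(index: Dict[str, Set[str]], query: str) -> Set[str]:
    """Return the URLs that contain every word of the query (AND search)."""
    words = tokenize(query)
    if not words:
        return set()
    result = set(index.get(words[0], set()))
    for word in words[1:]:
        result &= index.get(word, set())
    return result


index = build_index({
    "https://www.example.com/crawling": "What is crawling? The spider on the move",
    "https://www.example.com/indexing": "Crawling vs. indexing explained",
})
print(search(index, "crawling indexing"))  # {'https://www.example.com/indexing'}
```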

In SEO, a distinction is made between classic search engine optimization and Googlebot optimization. The Googlebot spends more time browsing websites with a high PageRank. PageRank is an algorithm developed by Google that analyzes and weights the link structure between pages: a page that is linked from many important pages is itself considered important. The time the Googlebot devotes to your website is referred to as its "crawling budget". The greater the "authority" of a page, the more crawling budget the website receives.
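The core idea of PageRank, as originally published, can be sketched with a short power iteration: each page passes a share of its score to the pages it links to, and a damping factor (commonly 0.85) models a user who occasionally jumps to a random page. This is the textbook formulation applied to a hypothetical four-page site, not Google's current ranking or crawl-budget logic, which is not public.

```python
from typing import Dict, List


def pagerank(
    links: Dict[str, List[str]],
    damping: float = 0.85,
    iterations: int = 50,
) -> Dict[str, float]:
    """Textbook PageRank via power iteration over a small link graph.

    links maps each page to the pages it links to. Pages without outgoing
    links ("dangling" pages) spread their score evenly over all pages.
    """
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        dangling = sum(rank[p] for p in pages if not links.get(p))
        # Random-jump share plus the evenly spread share of dangling pages.
        new_rank = {p: (1.0 - damping) / n + damping * dangling / n for p in pages}
        for page, targets in links.items():
            if targets:
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
        rank = new_rank
    return rank


# Hypothetical site: every page links back to the homepage.
site = {
    "home": ["blog", "contact"],
    "blog": ["home", "post"],
    "post": ["home"],
    "contact": [],
}
for page, score in sorted(pagerank(site).items(), key=lambda kv: -kv[1]):
    print(f"{page:8} {score:.3f}")
```

The scores always sum to 1, and the homepage ends up with the highest score because every other linked page points back to it, which is one reason strong internal linking to important pages matters.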

In an article about the Googlebot, Google states: “In most cases, the Googlebot accesses your website on average only once every few seconds. Due to network delays, the frequency may appear higher over short periods." In other words, as long as your website accepts crawlers, it is crawled continuously, although the Googlebot does not crawl all of its pages all the time. In the SEO world, there is much discussion about the "crawling rate" and how to get Google to recrawl a webpage for optimal ranking. The more relevance, backlinks, comments, etc., a page has, the more likely it is to appear in the search results. In this context, we want to stress the importance of good, up-to-date content: fresh content published consistently attracts the crawler's attention and increases the chances of your pages ranking at the top.
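Crawlers usually keep their request rate per host low with a so-called politeness delay. The sketch below shows one simple way to enforce a minimum gap between two requests to the same host. Google does not publish how Googlebot schedules its requests, so the class name `PolitenessScheduler` and the default of 3 seconds (standing in for "once every few seconds") are assumptions for illustration only.

```python
import time
from typing import Callable, Dict
from urllib.parse import urlparse


class PolitenessScheduler:
    """Enforces a minimum delay between two requests to the same host."""

    def __init__(
        self,
        min_delay_seconds: float = 3.0,  # assumed value for "every few seconds"
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.min_delay = min_delay_seconds
        self.clock = clock
        self.last_request: Dict[str, float] = {}  # host -> time of last request

    def wait_time(self, url: str) -> float:
        """Seconds to wait before the URL's host may be requested again."""
        host = urlparse(url).netloc
        last = self.last_request.get(host)
        if last is None:
            return 0.0  # host not contacted yet
        return max(0.0, last + self.min_delay - self.clock())

    def record_request(self, url: str) -> None:
        """Remember when the URL's host was last requested."""
        self.last_request[urlparse(url).netloc] = self.clock()


scheduler = PolitenessScheduler()
scheduler.record_request("https://www.example.com/")
print(scheduler.wait_time("https://www.example.com/blog/"))  # at most 3.0
print(scheduler.wait_time("https://other.example.org/"))     # 0.0
```

With a 3-second gap, a single host would receive at most 86,400 / 3 = 28,800 requests per day from this scheduler; the delay applies per host, so a crawler can still work on many sites in parallel.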

Before crawling, the Googlebot first requests a website's "robots.txt" file to read the rules for crawling the site. Pages disallowed there are not crawled, although, as described above, their URLs can still end up in the index.
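Python's standard library ships a robots.txt parser, `urllib.robotparser`, which makes it easy to check how rules like these are interpreted. The robots.txt content and URLs below are hypothetical. Note that a crawler follows only the group that names it most specifically, so Googlebot ignores the `*` rules here. Also, this parser applies the first matching rule, whereas Google resolves conflicting Allow/Disallow rules by the most specific (longest) path, so results can differ for more complex files.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for www.example.com
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /nogoogle/

User-agent: *
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for agent in ("Googlebot", "AhrefsBot"):
    for path in ("/blog/", "/private/report.html", "/nogoogle/page.html"):
        allowed = parser.can_fetch(agent, "https://www.example.com" + path)
        print(f"{agent:<10} {path:<22} allowed={allowed}")
```

Googlebot may fetch `/private/` here because its own group does not disallow it, while AhrefsBot falls back to the `*` group and may fetch `/nogoogle/` but not `/private/`.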

Google's crawler uses the sitemap.xml to discover the areas of a site that should be crawled and indexed. Because websites are built and organized in very different ways, the crawler may not automatically find every page or section. Dynamic content, low-rated pages, or large content archives with little internal linking in particular can benefit from a well-crafted sitemap. Sitemaps are also useful for giving Google metadata about videos, images, or, for example, PDF files, provided the sitemaps use these partly optional annotations. If you want to learn more about building a sitemap, read our blog article on “the perfect sitemap.”
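A basic sitemap following the sitemaps.org protocol can be generated with Python's standard library. This sketch covers only the required `<loc>` element and the optional `<lastmod>` element; the function name `build_sitemap` and the URLs are hypothetical, and extensions for images or videos are left out. ElementTree escapes special characters such as `&` in URLs automatically, which the protocol requires.

```python
import xml.etree.ElementTree as ET
from typing import Iterable, Optional, Tuple

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries: Iterable[Tuple[str, Optional[str]]]) -> str:
    """Build a sitemap.xml string from (url, lastmod) pairs.

    lastmod is optional and uses the W3C date format, e.g. "2019-01-11".
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # ElementTree escapes & < > here
        if lastmod:
            ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


print(build_sitemap([
    ("https://www.example.com/", "2019-01-11"),
    ("https://www.example.com/blog/?page=2&sort=new", None),
]))
```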

Controlling the Googlebot to get your website indexed is no secret. With simple means such as a well-designed robots.txt and good internal linking, you can achieve a lot and influence how your site is crawled.
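Whether internal linking actually reaches all important pages can be checked by comparing the URLs in a sitemap with the pages reachable from the homepage via internal links. Pages that appear in the sitemap but cannot be reached this way (often called orphan pages) are candidates for better internal linking. The link graph, the function names, and the paths below are hypothetical; in practice the graph would come from a crawl of your own site.

```python
from collections import deque
from typing import Dict, Iterable, List, Set


def reachable_pages(links: Dict[str, List[str]], start: str) -> Set[str]:
    """All pages reachable from start by following internal links (BFS)."""
    seen = {start}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in seen:
                seen.add(target)
                queue.append(target)
    return seen


def orphan_pages(
    sitemap_urls: Iterable[str], links: Dict[str, List[str]], start: str
) -> List[str]:
    """Sitemap URLs that no chain of internal links leads to."""
    reachable = reachable_pages(links, start)
    return sorted(url for url in sitemap_urls if url not in reachable)


links = {
    "/": ["/blog/", "/contact/"],
    "/blog/": ["/blog/crawling/"],
}
sitemap = ["/", "/blog/", "/blog/crawling/", "/contact/", "/archive/2014/old-post/"]
print(orphan_pages(sitemap, links, "/"))  # ['/archive/2014/old-post/']
```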

Are only a few of your pages being indexed by Google? Contact us. We can help you with both the strategy and the technical implementation.

What can we do for you?

Do you want to ensure that your website is crawled correctly? We are happy to advise you on the topic of search engine optimization!

We look forward to your inquiry.

Nadine Wolff

As a long-time expert in SEO (and web analytics), Nadine Wolff has been working at internetwarriors since 2015. She leads the SEO & Web Analytics team and is passionate about all the (sometimes quirky) innovations from Google and the other major search engines. In the SEO field, Nadine has published articles in Website Boosting and looks forward to professional workshops and a lasting exchange on organic search.