SEO

Axel Vortex

Published on: 08.08.2011

Google Webmaster Tools: Exclude URL Parameters

Google often fails to crawl and index websites well when their URLs contain session IDs, sorting options, or other parameters. The same content is frequently reachable under several URLs, which is a classic duplicate content problem. Googlebot may also crawl many identical pages and miss other, more important ones. The canonical tag (rel="canonical") can be used to tell Google which URL is preferred. For several weeks now, Webmaster Tools has also offered a new feature that lets site owners tell Google how individual parameters should be treated.
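The following minimal Python sketch shows the canonical-tag approach. It derives a preferred URL by dropping parameters assumed not to change the content and then builds the matching link element for the page's head. The parameter names `sessionid` and `sid` and the example URL are hypothetical. Which parameters are content-neutral depends entirely on your own site.

```python
from html import escape
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical: parameters assumed NOT to change page content on this site.
IGNORED_PARAMS = {"sessionid", "sid"}


def canonical_url(url: str) -> str:
    """Drop content-neutral parameters so all variants map to one preferred URL."""
    parts = urlsplit(url)
    kept = [(k, v) for k, v in parse_qsl(parts.query, keep_blank_values=True)
            if k.lower() not in IGNORED_PARAMS]
    # Rebuild the URL without the ignored parameters and without a fragment.
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


def canonical_link_tag(url: str) -> str:
    """Build the <link rel="canonical"> element, HTML-escaping the href value."""
    return f'<link rel="canonical" href="{escape(canonical_url(url), quote=True)}">'


print(canonical_link_tag("https://www.example.com/shoes?sessionid=abc123&color=red"))
# prints <link rel="canonical" href="https://www.example.com/shoes?color=red">
```

Every session variant of the page then points to the same preferred URL, while `color`, which is assumed here to change the content, is kept.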

When Googlebot finds different URLs with identical content, it generally groups them into a cluster. It then automatically selects the best-fitting URL from that cluster to show in search results. With the new Webmaster Tools feature, site owners who know the parameters on their site can intervene in this process. They can specify whether a parameter affects page content and how URLs containing that parameter should be crawled.
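Google does not publish how it clusters URLs or chooses the representative. This Python sketch only illustrates the general idea under stated assumptions. URLs are grouped by a key that drops parameters assumed to be content-neutral and sorts the remaining ones. Sorting assumes parameter order does not matter on this site. The representative is picked with a naive stand-in rule (shortest URL first). The parameter names and URLs are hypothetical.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical: parameters assumed not to affect page content.
CONTENT_NEUTRAL = {"sessionid", "utm_source"}


def cluster_key(url: str) -> str:
    """Normalize a URL: drop content-neutral parameters and sort the rest."""
    parts = urlsplit(url)
    params = sorted((k, v) for k, v in parse_qsl(parts.query, keep_blank_values=True)
                    if k.lower() not in CONTENT_NEUTRAL)
    return urlunsplit((parts.scheme, parts.netloc.lower(), parts.path, urlencode(params), ""))


def cluster(urls: list[str]) -> dict[str, list[str]]:
    """Group URLs that are assumed to serve identical content under one key."""
    groups: dict[str, list[str]] = defaultdict(list)
    for url in urls:
        groups[cluster_key(url)].append(url)
    return dict(groups)


def representative(group: list[str]) -> str:
    """Naive stand-in for Google's choice: shortest URL, ties broken alphabetically."""
    return min(group, key=lambda u: (len(u), u))


crawled = [
    "https://example.com/list?sort=price&sessionid=A1",
    "https://example.com/list?sessionid=B2&sort=price",
    "https://example.com/list?sort=price",
    "https://example.com/list?sort=name",
]
for key, group in cluster(crawled).items():
    print(key, "->", representative(group))
```

The three `sort=price` variants fall into one cluster, while `sort=name` forms its own, because sorting is assumed to change the content.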

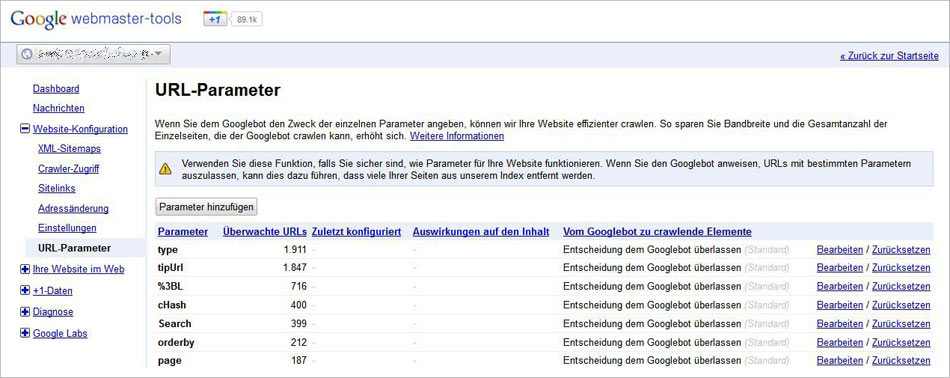

The new feature is located in the Site configuration section and is called "URL Parameters". It lists every parameter Google has found on the site. By default, the decision on how to handle each parameter is left to Googlebot. However, every parameter can be edited, and new parameters can be added.

Caution is advised when editing parameters. Using this feature requires precise knowledge of the individual parameters on your own site. If it is applied incorrectly, important areas of the website may be excluded from the index.
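One way to build the required knowledge of your own parameters is to inventory them from URLs you already have, such as a server log or a crawl export. The minimal Python sketch below counts how often each query parameter occurs and keeps a few sample values. The URL list is hypothetical, and reading it from a real log file is left out.

```python
from collections import Counter, defaultdict
from urllib.parse import parse_qsl, urlsplit


def parameter_inventory(urls: list[str]) -> tuple[Counter, dict[str, set[str]]]:
    """Count occurrences of each query parameter and collect a few example values."""
    counts: Counter = Counter()
    samples: dict[str, set[str]] = defaultdict(set)
    for url in urls:
        for name, value in parse_qsl(urlsplit(url).query, keep_blank_values=True):
            counts[name] += 1
            if len(samples[name]) < 3:  # keep at most three distinct examples
                samples[name].add(value)
    return counts, samples


urls = [
    "https://example.com/shop?lang=de&page=2",
    "https://example.com/shop?lang=en&sessionid=x9",
    "https://example.com/shop?page=3&sort=price",
]
counts, samples = parameter_inventory(urls)
for name, n in counts.most_common():
    print(f"{name}: seen {n} times, e.g. {sorted(samples[name])}")
```

A list like this makes it easier to decide, parameter by parameter, whether it changes the content before touching any setting.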

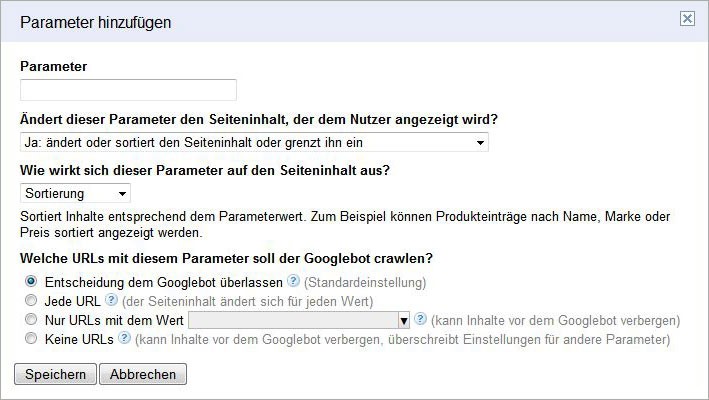

There are basically two cases. Either a parameter changes a page's content by sorting, translating, narrowing, or otherwise adjusting it, or it has no effect on the content. If it has no effect, as with a session ID, no further settings are needed. Googlebot will then crawl only one representative URL from each group of URLs that differ solely in this parameter.

If the parameter does change the content, you can tell Googlebot how to handle the affected URLs. Examples are sort, page, or lang for sort order, page number, or language. By default, the decision is left to Googlebot. Alternatively, you can specify that Googlebot should crawl every URL, only URLs with a specific value, or no URLs with this parameter at all. For the last option, excluding the URLs via robots.txt is preferable. Conveniently, all parameters and their settings can be downloaded as a table for closer review.
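To make the crawl options concrete, the Python sketch below models them as a small decision function. It is an illustration only, not how Webmaster Tools or Googlebot work internally. The option names, the `SETTINGS` table, the `NEUTRAL` set, and the URLs are all hypothetical. `None` stands for "left to Googlebot".

```python
from enum import Enum
from typing import Optional
from urllib.parse import parse_qsl, urlsplit


class Crawl(Enum):
    """Hypothetical labels for the crawl options described above."""
    LET_GOOGLEBOT_DECIDE = "decide"
    EVERY_URL = "every"
    ONLY_VALUE = "only_value"
    NO_URLS = "none"


# Hypothetical: parameters that do not change content (deduplicated, not blocked).
NEUTRAL = {"sessionid"}

# Hypothetical site settings: parameter -> (crawl option, allowed value or None).
SETTINGS = {
    "sort": (Crawl.NO_URLS, None),
    "lang": (Crawl.EVERY_URL, None),
    "page": (Crawl.ONLY_VALUE, "1"),
}


def should_crawl(url: str) -> Optional[bool]:
    """True/False for a configured decision, None if it is left to Googlebot."""
    undecided = False
    for name, value in parse_qsl(urlsplit(url).query, keep_blank_values=True):
        if name in NEUTRAL:
            continue  # content-neutral: only one representative URL is crawled anyway
        option, allowed = SETTINGS.get(name, (Crawl.LET_GOOGLEBOT_DECIDE, None))
        if option is Crawl.NO_URLS:
            return False  # any excluded parameter rules out the whole URL
        if option is Crawl.ONLY_VALUE and value != allowed:
            return False
        if option is Crawl.LET_GOOGLEBOT_DECIDE:
            undecided = True
    return None if undecided else True


print(should_crawl("https://example.com/list?lang=de"))         # True
print(should_crawl("https://example.com/list?sort=price"))      # False
print(should_crawl("https://example.com/list?page=2"))          # False
print(should_crawl("https://example.com/list?page=1&lang=en"))  # True
print(should_crawl("https://example.com/list?color=red"))       # None
```

For a parameter set to "no URLs", the text recommends a robots.txt exclusion instead. Googlebot supports `*` wildcards in robots.txt paths, so rules such as `Disallow: /*?sort=` and `Disallow: /*&sort=` are one possible way to express that.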

Whether or not rel=canonical is used, the new feature is a good way to optimize a site's indexing and guide Googlebot efficiently through your own domain.

Axel Zawierucha is a successful businessman and internet expert. He began his career in journalism at some of Germany's leading media companies. As early as the 1990s, Zawierucha recognized the importance of the internet and became a marketing director at some of the first digital companies, eventually founding internetwarriors GmbH in 2001. For 20 years (an eternity in digital terms!), the WARRIORS have been a top choice in Germany for comprehensive online marketing. Their rallying cry then and now is "We fight for every click and lead!"