Julien Moritz

published on: 13.02.2026

Optimizing content specifically for prompts using the Query Fan-Out principle

Large Language Models (LLMs) like ChatGPT, Claude, or Gemini are fundamentally changing how content is found, evaluated, and utilized. Visibility is no longer solely achieved through traditional search queries but increasingly through prompts that users input into AI systems.

A frequently mentioned principle for optimizing one's content in this regard is the so-called Query Fan-Out principle. But what does this specifically mean for your content? In this article, you'll learn how ChatGPT & Co. decompose inquiries in the background and how you can structure your content so that it is relevant, comprehensible, and quotable for LLMs.

Key Points at a Glance

LLMs generate multiple search queries simultaneously from a prompt (Query Fan-Out).

These queries often run in parallel, in both German and English.

Content is evaluated based on topics, entities, terms, and synonyms.

In just a few steps, you can analyze for yourself which queries ChatGPT uses. This article shows you how.

Concrete requirements for your content structure can be derived from this.

What is Query Fan-Out?

Query Fan-Out describes the process by which an LLM generates multiple sub-queries from a single prompt. The name comes from the way one prompt fans out into many individual queries. In the background, the system sends these search queries simultaneously to a search index (e.g., Bing or Google). Only after synthesizing selected results does the AI compile the final answer.
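To make the idea more tangible, here is a minimal Python sketch of the fan-out pattern. It is purely illustrative and not how ChatGPT is actually implemented: the `fan_out`, `fake_search`, and `run_fan_out` functions are hypothetical, the sub-queries are hard-coded instead of generated by a model, and the search call is a stand-in that returns placeholder text.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical sub-topics of one prompt, each with a German and an English query.
# In a real system the LLM would generate these; here they are hard-coded.
SUB_TOPICS = [
    {"de": "pivotable wall mount 65 inch TV recommendations",
     "en": "best full motion TV wall mount for 65 inch TVs review high quality"},
    {"de": "Recommended seat distance 65 inch TV",
     "en": "what is recommended viewing distance for 65 inch TV"},
]


def fan_out(sub_topics, languages=("de", "en")):
    """Turn each sub-topic into one (language, query) pair per language."""
    return [(lang, topic[lang]) for topic in sub_topics for lang in languages]


def fake_search(query):
    """Stand-in for a search index call; returns a placeholder result string."""
    lang, text = query
    return f"[{lang}] top result for: {text}"


def run_fan_out(sub_topics):
    """Send all sub-queries in parallel and collect the results in query order."""
    queries = fan_out(sub_topics)
    with ThreadPoolExecutor() as pool:
        results = list(pool.map(fake_search, queries))
    return queries, results


queries, results = run_fan_out(SUB_TOPICS)
for line in results:
    print(line)
```

The final step, synthesizing one answer from the collected results, is left out because it depends entirely on the model.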

The step-by-step guide further down shows how you can easily investigate this yourself for any prompt.

Why is Query Fan-Out so important?

Your content is meant to be found, and users increasingly turn to Large Language Models to find it. This changes the requirements: your content should continue to appear in Google search results and also be used by as many LLMs as possible for answer generation.

The better your content matches the generated queries, the more likely it is to be used by LLMs as a source.

Step-by-Step Guide: What Queries are Created by ChatGPT?

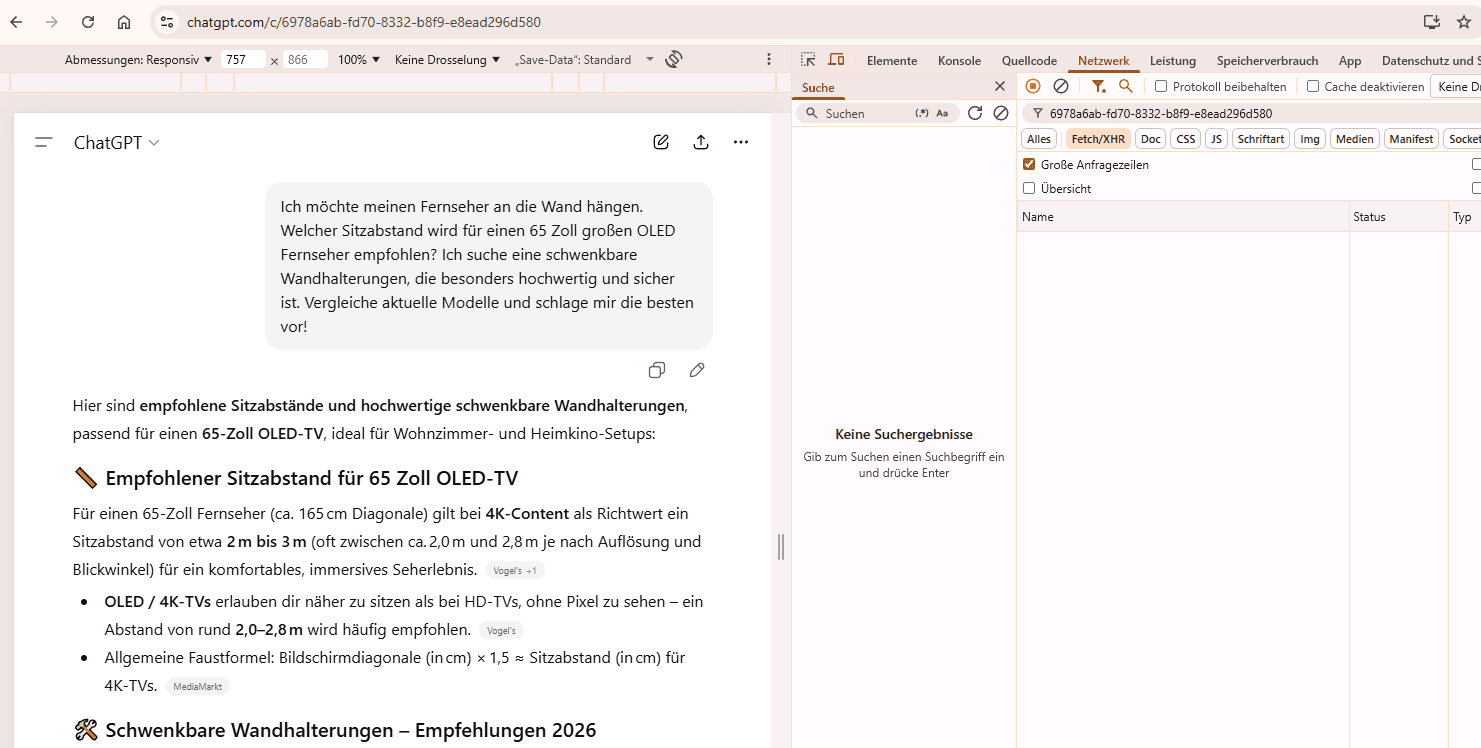

A sample prompt in ChatGPT shows clearly what these queries look like. You can easily reproduce this for your own prompts and optimize your content accordingly.

Step 1: Open Developer Tools

Open ChatGPT in the browser

Enter a prompt and submit it

Right-click somewhere in the interface

Select “Inspect”

Step 2: Filter Network Tab & Search for Chat-ID

Switch to the “Network” tab

Filter by Fetch / XHR

Copy the chat-ID from the last part of the URL

Paste it into the search field

Reload the page

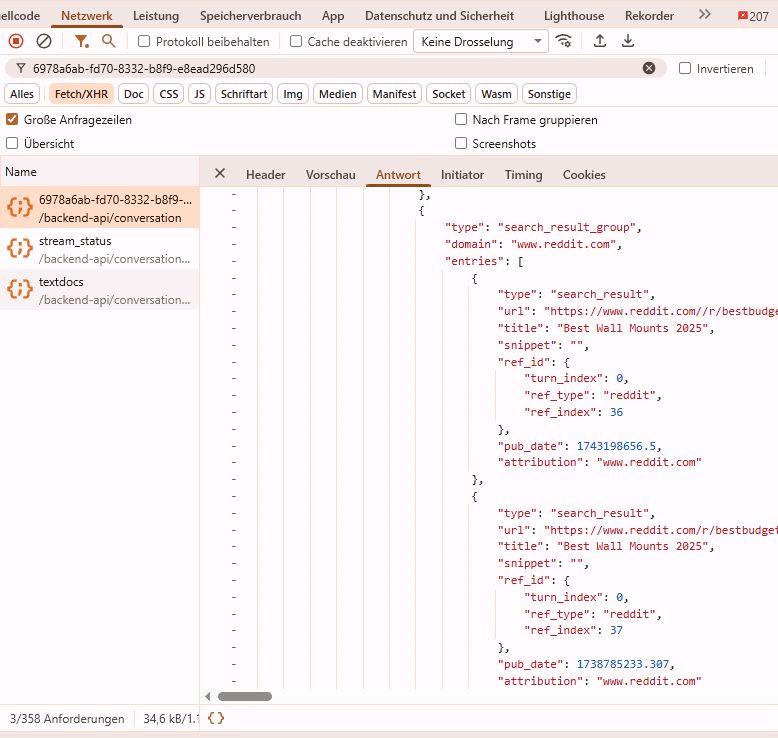
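If you check many chats, copying the ID by hand gets tedious. The following sketch extracts the last path segment of a chat URL. The URL shape and the all-zero ID are assumptions for illustration only; use the URL from your own browser.

```python
from urllib.parse import urlparse


def chat_id_from_url(url):
    """Return the last non-empty path segment of a URL, or None if there is none."""
    segments = [part for part in urlparse(url).path.split("/") if part]
    return segments[-1] if segments else None


# Placeholder URL; the host, path layout, and ID are assumed for this example.
example_url = "https://chatgpt.com/c/00000000-0000-0000-0000-000000000000"
print(chat_id_from_url(example_url))
```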

Step 3: Select Network Request

Click on the network request with the chat-ID in the name

Switch to the “Response” tab

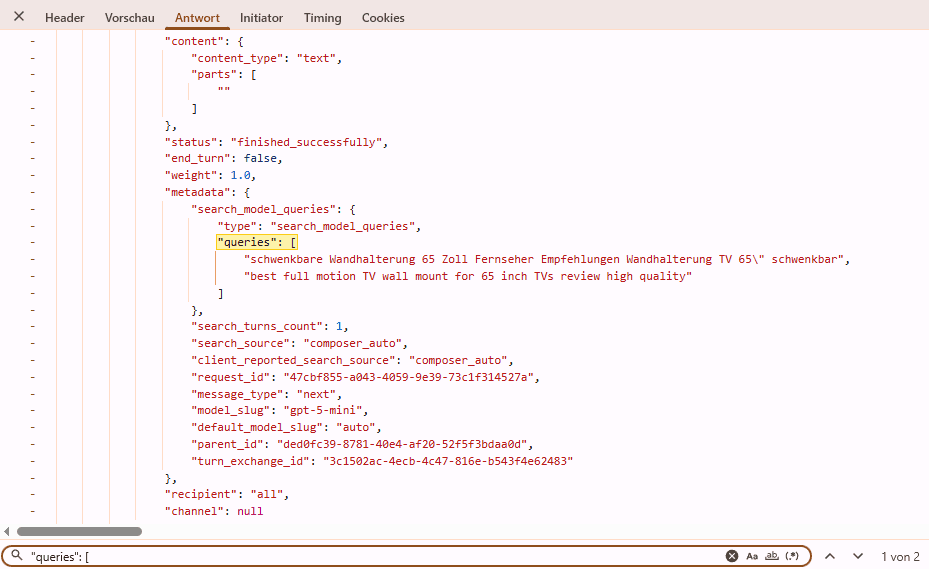

Step 4: Find Queries

Search for the term “queries”

Now you see specific search queries that ChatGPT uses for web search

Mostly in German and English
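Instead of searching the response by hand, you can copy it into a file and let a small script collect the queries. The sketch below assumes that the response is valid JSON and that the queries are stored as lists of strings under a key named "queries". The exact nesting is not documented and may change, so the function simply walks the whole structure. The sample data is invented to keep the example runnable.

```python
import json


def find_queries(node, key="queries"):
    """Recursively collect all strings stored in lists under `key`."""
    found = []
    if isinstance(node, dict):
        for name, value in node.items():
            if name == key and isinstance(value, list):
                found.extend(item for item in value if isinstance(item, str))
            found.extend(find_queries(value, key))
    elif isinstance(node, list):
        for item in node:
            found.extend(find_queries(item, key))
    return found


# Invented sample; a real response is much larger and nested differently.
sample_response = json.loads("""
{
  "outer": [
    {"inner": {"queries": [
      "best full motion TV wall mount for 65 inch TVs review high quality",
      "what is recommended viewing distance for 65 inch TV"
    ]}},
    {"inner": {"queries": [
      "what is recommended viewing distance for 65 inch TV"
    ]}}
  ]
}
""")

# dict.fromkeys removes duplicates while keeping the original order.
unique_queries = list(dict.fromkeys(find_queries(sample_response)))
for query in unique_queries:
    print(query)
```

For a real response, replace the sample with `json.load(open("response.json", encoding="utf-8"))`, where `response.json` is a hypothetical file name for the text you copied from the "Response" tab.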

Step 5: Evaluation of Requests

The following prompt was entered:

“I want to mount my TV on the wall. What is the recommended seat distance for a 65-inch OLED TV? I'm looking for a high-quality and safe full-motion wall mount. Compare current models and suggest the best ones!”

ChatGPT uses two sub-queries in the web search to find suitable content, each issued in German (DE, shown here in translation) and English (EN):

1. DE: “pivotable wall mount 65 inch TV recommendations wall mount TV 65" pivotable”

1. EN: “best full motion TV wall mount for 65 inch TVs review high quality”

2. DE: “Recommended seat distance 65 inch TV distance OLED TV seat distance”

2. EN: “what is recommended viewing distance for 65 inch TV”

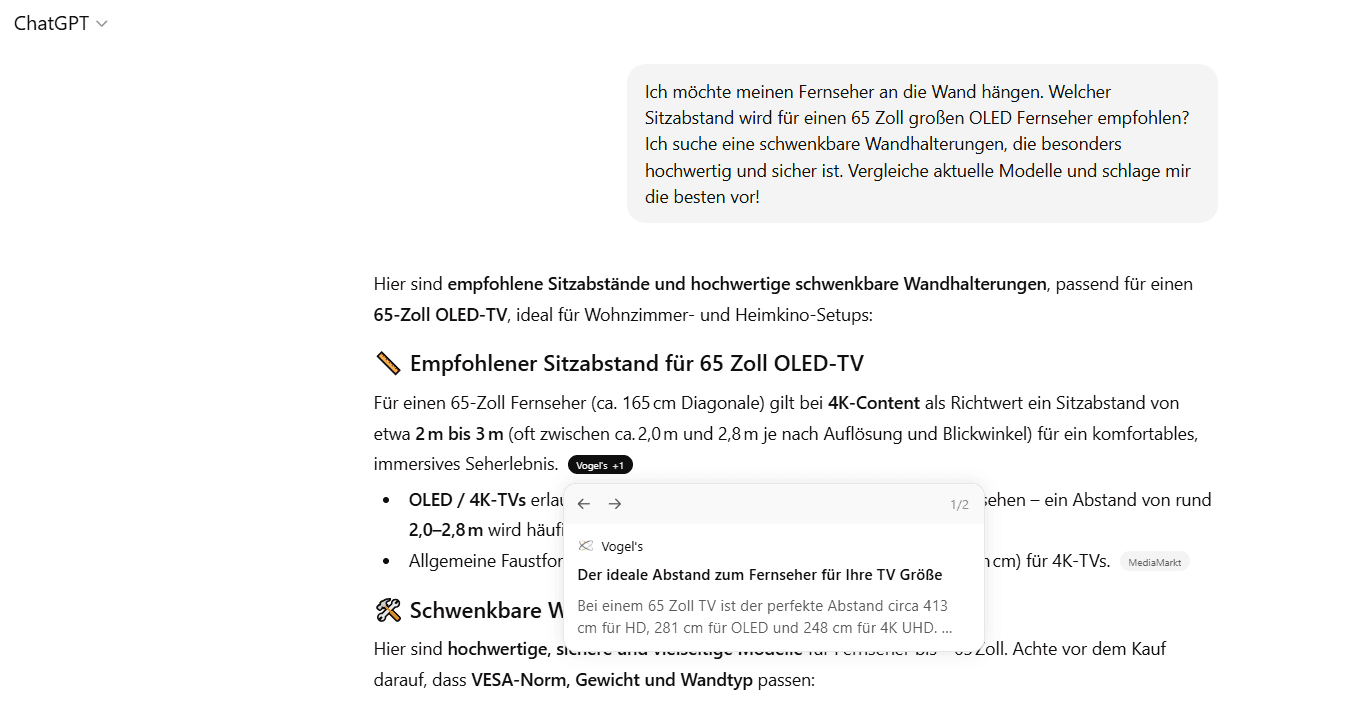

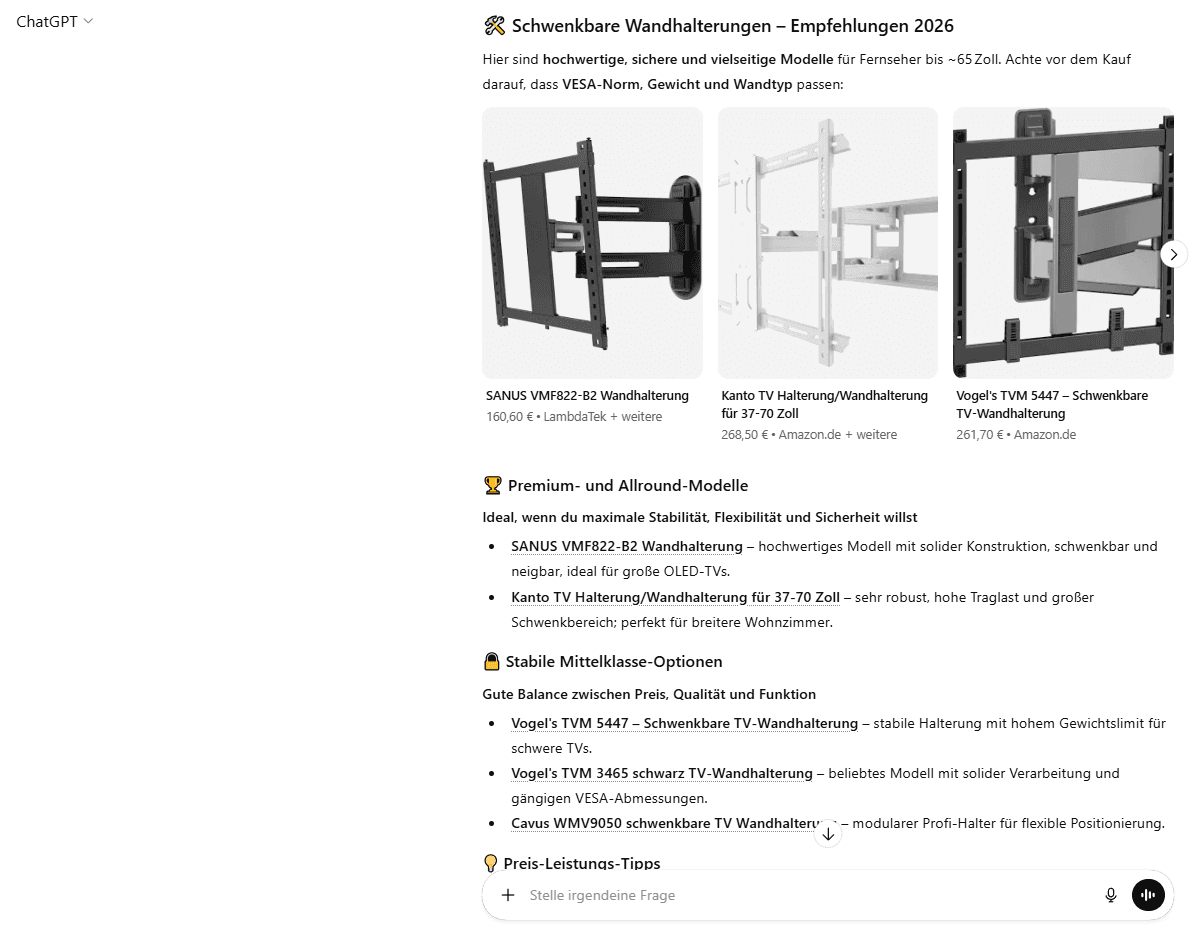

Using these queries, ChatGPT searches for suitable sources and then generates its answer, including source citations.

Now look carefully at the queries and at the sources used. What types of content are cited?

The example clearly shows that there is an information cluster and a comparison cluster, and that different sources are used for each. To be found for the informational part of this prompt, you need an informative article on the topic “Recommended viewing distance to TV”. ChatGPT's queries show that the subtopics TV size in inches and display type (e.g., OLED) should be addressed. Additionally, the synonym “TV viewing distance” should appear in the content, preferably in an H2.
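A quick way to check such requirements is to compare the terms in the queries with the terms in your article. The sketch below is a deliberately simple word-level comparison, not a model of how an LLM scores content. The `STOPWORDS` set and the sample article text are assumptions made for this example.

```python
import re

# Small, hand-picked stopword list; extend it for your own language and topic.
STOPWORDS = {"what", "is", "for", "the", "and", "on", "of", "to"}


def terms(text):
    """Lower-case word tokens with at least two characters, minus stopwords."""
    tokens = re.findall(r"[a-zäöüß0-9]+", text.lower())
    return {token for token in tokens if len(token) >= 2 and token not in STOPWORDS}


def missing_terms(queries, content):
    """Map each query to the sorted list of its terms that the content lacks."""
    content_terms = terms(content)
    return {query: sorted(terms(query) - content_terms) for query in queries}


queries = [
    "what is recommended viewing distance for 65 inch TV",
    "Recommended seat distance 65 inch TV distance OLED TV seat distance",
]

# Placeholder article text, used only to demonstrate the check.
article = (
    "The recommended viewing distance for a 65 inch OLED TV "
    "depends on resolution and room layout."
)

for query, missing in missing_terms(queries, article).items():
    print(f"{query!r} -> missing: {missing}")
```

In this sample, the second query reports "seat" as missing, which points to a synonym ("seat distance") that the article does not yet cover.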

The product selection comes from other articles. Your products should therefore appear in as many comparison articles (on external websites) on the topic “Best TV wall mount” as possible so that they can be presented here. ChatGPT also accesses manufacturer websites, so you can influence the answers of LLMs with your own content on product and category pages. Consider carefully what makes your product or service unique and how you stand out from competitors, because exactly these advantages can bring users from the AI chat to your own website.

It can also be beneficial to publish your own comparison articles. Naturally, you should present your own brand prominently in them, but also mention competitors and their advantages.

The English-language queries indicate that LLMs also draw on the English-language web, where information density is generally higher. Translating your own content can therefore be a great advantage and ensure greater visibility in ChatGPT and similar tools.

Strategies for Optimization for the Query Fan-Out Principle

What does the Query Fan-Out principle mean for your own content? You need an SEO strategy that works even in the age of generative AI. For this, we have developed five tips that you can directly implement.

1. Comprehensive Topic Clusters Instead of Keyword Focus

Query Fan-Out behavior shows that AI systems aim to capture topics in their entirety. LLMs divide a prompt into multiple thematic clusters with varying intent, such as information, comparison, or product queries.

Informative content should be built comprehensively. Content should not only answer "What" questions but also "How", "Why", and "What are the alternatives?" Use targeted synonyms and related entities. If you write about “TV wall mounts”, terms like "VESA", "Pivotable", and "OLED television" should be included.

2. Direct Answers

Write precise definitions and direct answers to user questions at the beginning of your paragraphs. An AI looking for a quick answer to a sub-query will more likely cite text that provides a clear answer: “The ideal viewing distance for a 65-inch OLED TV is about 2.50 to 2.80 meters.” Avoid unnecessary filler sentences just to include keywords.

Further detailed and extensive information considering secondary keywords can be placed afterward.

3. Structured Data

LLMs work resource-efficiently and favor structure. When an AI conducts a price comparison or technical analysis, it preferably draws on data marked up with Schema.org vocabulary. Use structured data in JSON-LD format to make products, FAQs, and reviews machine-readable.
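As a rough illustration, the following Python sketch builds FAQ markup with the Schema.org types `FAQPage`, `Question`, and `Answer` and wraps it in a JSON-LD script tag. The question and answer reuse the viewing-distance example from this article. The helper `to_script_tag` is a hypothetical name, and how you embed the tag depends on your CMS.

```python
import json

# FAQ markup using Schema.org vocabulary; the content is example text.
faq = {
    "@context": "https://schema.org",
    "@type": "FAQPage",
    "mainEntity": [
        {
            "@type": "Question",
            "name": "What is the recommended viewing distance for a 65-inch OLED TV?",
            "acceptedAnswer": {
                "@type": "Answer",
                "text": (
                    "The ideal viewing distance for a 65-inch OLED TV "
                    "is about 2.50 to 2.80 meters."
                ),
            },
        }
    ],
}


def to_script_tag(data):
    """Serialize a dict as JSON-LD and wrap it in a script tag for the page head."""
    payload = json.dumps(data, indent=2, ensure_ascii=False)
    return f'<script type="application/ld+json">\n{payload}\n</script>'


print(to_script_tag(faq))
```

Generating the markup from the same data that renders the visible page helps keep the structured data and the on-page text consistent.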

4. Internationally Visible Content

Often, Large Language Models automatically generate English-language queries, even when prompts are written in German. Therefore, building internationally visible content is increasingly important, even if your target audience is German-speaking. You should provide your core content in English as well.

5. Building "External" Visibility

Transactional inquiries like “Best price-performance TV wall mount 2026” are answered using comparison content and user reviews. To be visible with your brand in LLMs, you need to build recognition. Content partnerships with magazines or collaborations with influencers who publish independent reports and product comparisons are a strong lever. It is not just about classic backlinks that provide authority but also about mentions of the brand in a relevant context on as many platforms as possible. These can be articles in magazines, on competitor sites, and at online retailers, as well as user-generated content (UGC) on YouTube, Reddit, etc.

Conclusion: SEO & GEO United

Query Fan-Out reveals how LLMs find and evaluate content. By structuring your content so that it answers multiple questions at once, covers its topic completely, and includes relevant entities and synonyms, you optimize not only for traditional search engines but also deliberately for AI systems. This is where a new form of visibility is currently being created. Optimization for the Query Fan-Out principle is no longer a "nice-to-have" but the new foundation for digital visibility. By understanding how LLMs deconstruct queries, you can create content that is not only found but also cited as a trustworthy source.

If you need assistance or want to optimize your content specifically for LLMs, our SEO/GEO team will gladly advise you. Contact us now!

Since October 2023, Julien Moritz has been bringing fresh energy to the internetwarriors' SEO team. With his strong interest in online marketing and passion for SEO strategies, he helps businesses enhance their digital presence. His determined and solution-oriented approach enables internetwarriors' clients to achieve their goals.
